Lab 4: Performance Measurement

Overview

This lab will walk you through the tools to measure your program's performance and use of CPU and memory resources, as well as identify specific locations in the code where performance bottlenecks may exist. In future labs, we will work on optimizing program performance through a variety of compiler and human methods.

Pre-Lab

A boilerplate array sort program has been written for you to build upon in this prelab. An example bubble sort function has already been implemented. You need to extend the existing code to add the selection sort and merge sort algorithms into the empty functions that already exist in the source code.

To begin this lab, start by obtaining the necessary boilerplate code. Enter the class repository:

unix> cd ~/bitbucket/2012_fall_ecpe170_boilerplate/

Pull the latest version of the repository, and update your local copy of it:

unix> hg pull

unix> hg update

Copy the files you want from the class repository to your private repository:

(In this case, it is one folder you want)

unix> cp -R ~/bitbucket/2012_fall_ecpe170_boilerplate/lab04/prelab ~/bitbucket/2012_fall_ecpe170/lab04

Enter your private repository now, specifically the lab04 folder:

unix> cd ~/bitbucket/2012_fall_ecpe170/lab04

Add the new files to version control in your private repository:

(Technically, prelab is a directory, but Mercurial is smart enough to just add all the files in these directories with this command)

unix> hg add prelab

Commit the new files in your personal repository, so you can easily go back to the original starter code if necessary

unix> hg commit -m "Starting Lab 4 with boilerplate code"

Push the new commit to the bitbucket.org website

unix> hg push

Enter the prelab folder

unix> cd prelab

You should see the following code:

- Makefile that can compile the complete program, including the correct GCC settings to enable the C99 language standard. (Needed since we use the "bool" type that was not present in the original C standard)

- main() function in sorting.c

- Helper functions in helper_functions.c:

- Initialize array with random data

- Verify that array has been properly sorted

- Bubble sort algorithm

- Empty functions in your_functions.c

- Selection sort - Implement your code here!

- Merge sort - Implement your code here!

Pre-lab Assignment:

(1) Implement the remaining two sort algorithms (selection sort and and merge sort) in the file your_functions.c.

(2) Run all three algorithms: bubble sort, selection sort, and merge sort and ensure that the verification function reports success for each algorithm

(3) Check your code (including Makefile) into version control, and push to BitBucket.

Warning: ***Zero*** points will be awarded for missing source code files that were never added to version control, broken Makefiles, or other any errors that prevent your program from compiling/running! (You might want to do a test checkout to a different directory and verify that you can still compile your program)

Checkpoint to obtain pre-lab credit - due by start of class:

(1) Show me the output of your program when run with the selection sort and merge sort options.

(2) Show me the BitBucket page that lists recent commits, and point out the commit that uploaded your final code for this program.

Program usage instructions:

Compile the program:

unix> make

Run the bubble sort routine.

unix> ./sorting_program bubble

Note the program output, particularly the self-test at the end that verifies the sorting function worked correctly.

Initializing 100000 elements in array...

Using bubble sort algorithm...

Verifying array sorting...

Congrats! Array is sorted correctly

A note for students with old computers: **One minute** is plenty of execution time for the bubble sort. On my laptop, that is about 100,000 array elements. If that array size takes too long on your computer, feel free to experiment with a smaller array size (50,000? 25,000? 12,500?) and change it in the file sorting.c. I'm interested in the relative performance difference, not the absolute numbers.

Lab Part 1 - CPU Usage

Assuming you have completed the pre-lab and can run all three sort algorithms correctly, let's decide which is "best". First up - performance! How long does it take to run?

Use the time utility to measure program execution time. This program works as follows: time <command to measure>. To measure your sorting algorithms, use:

unix> time ./sorting_program bubble

unix> time ./sorting_program selection

unix> time ./sorting_program merge

For the first command, the utility you are running is called "time". Everything after that is the argument that you give to the time program, i.e. "./sorting_program bubble". The time program will take this argument and use it as the command being measured.

This utility will report three metrics:

- "Real" time is the total time (equivalent to your stopwatch)

- "User" time is the time spent running your user application code

- "Sys" time is the time the Linux kernel spent servicing your application code

When you use the time command on real programs (beyond this toy sorting example program), the real time may be much larger than the sum of the user and system time. This is OK. The most likely explanation is that the program was waiting for the disk to retrieve data, and thus the CPU was idle. An idle CPU does not count towards user or system time, but the real time (i.e. wall clock) is still ticking away!

Lab Report:

(1) Create a table that shows the real, user, and system times measured for each of the three algorithms

(2) In the sorting program, what actions take user time?

(3) In the sorting program, what actions take kernel time?

(4) Which sorting algorithm is fastest? (It's so obvious, you don't even need a timer)

Lab Part 2 - CPU Usage with Valgrind

Although we've measured how much time our program takes to execute, we don't know where in the code that time is used. (For the trivial sorting program, you can make a good guess, but guessing might be less effective on a larger program, and you might guess wrong!) To see what code is taking the most time, you need to profile your program. There are many tools that can do this, but unfortunately some of the more common on Linux (OProfile and Perf) don't work inside a virtual machine because they tap into low-level processor features that are not virtualized. Fortunately, one tool does function correctly: Valgrind. In this section, we will use Valgrind to annotate your source code with the fraction of time each line spends executing while your program runs.

Valgrind operates by converting your program (in real time) to run on a simulated CPU, and then simulates it. This is similar to how Java programs are compiled to the Java virtual machine (a simulated machine), and then executed. Although this process is relatively slow, it allows Valgrind to record cycle-by-cycle information about the behavior of your program.

Valgrind requires that the program to be profiled be built using the -g option to ensure that the program includes debugging information linking specific instructions in the compiled program back to the original lines in the source code. Make the following additions of -g to the Makefile:

CFLAGS=-c -Wall -std=c99 -g

After that, clean out the old program and recompile:

unix> make clean

unix> make

The valgrind program works as follows: valgrind <valgrind options> <program to monitor>. We have a lot of options for Valgrind here:

- --tool=callgrind : Callgrind is the name of the call-graph module in Valgrind (a call-graph is a list of what function called what other functions). Enable this module.

- --dump-instr=yes : Configures Callgrind to perform event counting at the source line granularity (allowing for source code annotation later).

- --callgrind-out-file=callgrind.out : By default, Callgrind puts logging information in a different file name each time (based on the program ID number). It's easier for this lab to specify a constant file name.

To process the log file into human readable results, the callgrind_annotate utility is run after valgrind in order to display the output.

- --auto=yes : The annotate program will attempt to find the original source code, and label each line with execution time

- --threshold=100 : The annotate program will list all functions consuming resources, even if they took minimal time. (Setting a lower threshold, like 90, indicates that you are only interested in the top 90% of lines consuming CPU resources)

- The output of this program can then by redirected into a file by using the output redirection operator: >

To put this all together, the commands you would use to run Valgrind on your bubble sort program is:

unix> valgrind --tool=callgrind --dump-instr=yes --callgrind-out-file=callgrind.out ./sorting_program bubble

<< This will take a long time to finish! 5x slower in Valgrind >>

unix> callgrind_annotate --auto=yes --threshold=100 callgrind.out > callgrind_results.txt

unix> gedit callgrind_results.txt &

In the output file, callgrind is reporting activity at the Instruction Read (IR) level. In other words, each time a new instruction is loaded by the processor, callgrind records it. These instructions are *not* lines of code in your program. Rather, they are assembly-level instructions that the compiler produced from your code. (We'll cover assembly programming later in this semester). Even the simplest lines in C are almost always composed of more than one assembly instructions. Thus, you should not expect to see counts of "1" instruction in the output file.

In the first section of the output file, each line shows the cumulative number of Instruction Reads (IRs) for each function of your program, sorted from most popular to least popular.

In the second section of the output file, the source code for your program is annotated on a line-by-line basis, showing the number of instruction reads required to execute that line. In the case of functions, the IR count is the cumulative count of that function and all functions it calls. Note that not every line of (assembly) code executed can be annotated. At the bottom of the report is a list of files that the annotate tool could not locate the source code for. Notice that these are all library functions. (The source code certainly exists somewhere online, but probably not on your computer. And the libraries are likely compiled without debugging support, to improve performance, which makes annotation impossible).

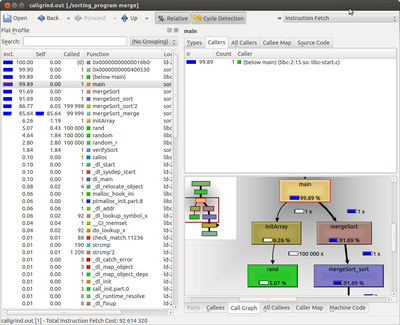

The plain text output file of callgrind_annotate, although useful, is a bit unwieldy to use, especially as the program complexity increases. An alternate approach to viewing the same callgrind data is offered by a GUI tool: kcachegrind. To run this utility:

unix> kcachegrind callgrind.out &

Explore the GUI on your own to get a feel for its operation. When ready, your task is:

Run Callgrind separately for the bubble, selection, and merge sort algorithms. Using either the plain text output or the GUI tool (as appropriate), answer the following lab report questions below.

Tip 1: If you create a separate output file for each profile, you'll avoid having to re-run the profiler multiple times per algorithm.

Tip 2: Read all of the requirements for this section of the lab report first. You can combine several requirements together to save time.

Tip 3: The callgrind tool is *very* CPU intensive. I estimate it takes 10 times longer to run a program with profiling than without profiling. For this section, you are permitted to decrease the size of the array to be sorted by 1/2, 1/4, 1/8, etc... by changing the #define in sorting.c provided that you (a) use a consistent size across all three programs, and (b) document the size in your lab report, so I'm not left wondering why your total instruction counts are so much smaller than your classmates!

Tip 4: I expect the bubble sort to take forever in Valgrind (forever = 10 minutes). You could start work on the lab 5 pre-lab (answering questions about your system configuration) while Valgrind runs. (Yes, it will distort your measurements a bit using the time command, but we already expect bubble sort to yield terrible performance, so it won't make a noticeable difference....)

Lab Report:

(5) Create a table that shows the total Instruction Read (IR) counts for all three programs. (In the text output format, IR is the default unit used. In the GUI program, un-check the "% Relative" button to have it display absolute counts of instruction reads).

(6) Create a table that shows the top 3 most active functions for all three programs by IR count (excluding main()) - Sort by the "self" column if you're using kcachegrind, so that you see the IR counts for *just* that function, and not include the IR count for functions that it calls.

(7) Create a table that shows, for all three programs, the most CPU intensive line that is part of the most CPU intensive function. (i.e. First, take the most active function for a program. Second, find the annotated source code for that function. Third, look inside the whole function code - what is the most active line? If by some chance it happens to be another function, drill down one more level and repeat.)

(8) Using the "Screenshot" program in Ubuntu, include a screen capture that shows the kcachegrind utility displaying the callgraph for the merge sort algorithm, starting at the main() function, and going down (into the sort algorithm) to its deepest point.

(9) The Valgrind utility is very CPU intensive. This low-level monitoring of your program does not come for free! Use the time utility to compare the execution time of the bubble sort program both with and without Valgrind massif monitoring. Create a table that shows the difference in time. Also show the exact commands you used to perform the measurement.

Lab Part 3 - Memory Usage with Valgrind

In addition to CPU usage, memory usage is another key factor in comparing algorithm designs. Valgrind, as part of its ability to monitor program execution, can also report statistics on program memory usage.

Valgrind requires that the program to be profiled be built using the -g option to ensure that the program includes debugging information linking specific instructions in the compiled program back to the original lines in the source code. Ensure that your Makefile still has the -g flag present, as directed previously.

The valgrind program works as follows: valgrind <valgrind options> <program to monitor>. We have a lot of options for Valgrind here:

- --tool=massif : Massif is the name of the memory profiler module in Valgrind. Enable this module.

- --stack=yes : Massif by default only monitors "heap" memory (used for dynamic allocation). Our sorting program also has local variables in the function that are statically allocated on the stack, including the huge array to be sorted. Thus, tell Massif to include stack memory usage too.

- --detailed-freq=1000000 : The "detailed traces" of Massif show what functions are consuming the Heap memory. We don't need that functionality to find the total memory usage. Setting this to a really big number means it won't run a detailed trace during the lifetime of our small program.

- --massif-out-file=massif.out : By default, Massif puts logging information in a different file name each time (based on the program ID number). It's easier for this lab to specify a constant file name.

To process the log file into human readable results, the ms_print utility is run after valgrind. The results of this utility are redirected into the file massif_results.txt.

To put this all together, the commands you would use to run Valgrind on your bubble sort program is:

unix> valgrind --tool=massif --stacks=yes --detailed-freq=1000000 --massif-out-file=massif.out ./sorting_program bubble

unix> ms_print massif.out > massif_results.txt

unix> gedit massif_results.txt &

The output display is described in detail in the program documentation. But briefly, you should see a table displayed with the following columns:

- n : The snapshot number, 1 being the first time Massif scanned your program

- time(i) : The number of elapsed instructions in your program

- useful-heap(B) : The number of bytes of memory that your program has requested (via dynamic memory allocation)

- extra-heap(B): Any extra bytes that were given to your program by the operating system (think of this as "overhead")

- stacks(B): The number of bytes of memory that your program is using on the stack. This includes the functions from main() to the current point in the code, and all of the static variables in those functions.

Restore the array size in sorting.c to its original value (or the smaller original value, if run on a slower computer). Include the array size in the lab report for the following questions:

Lab Report:

(10) Document the total memory, heap memory, and stack memory used for the bubble sort program at three points: The first snapshot, a middle snapshot, and the last snapshot.

(11) Document the total memory, heap memory, and stack memory used for the selection sort program at three points: The first snapshot, a middle snapshot, and the last snapshot.

(12) Document the total memory, heap memory, and stack memory used for the merge sort program at three points: The first snapshot, a middle snapshot, and the last snapshot.

(13) Why does the merge sort use heap memory, while the bubble and selection sort programs do not? Support your answer with a specific line from the program source code.

Lab Part 4 - Memory Leaks with Valgrind

One of the most common uses for the Valgrind tool is to monitor programs while they are running, and detect possible memory-related errors. The Memcheck module in Valgrind can detect the following errors:

- Program accesses memory it shouldn't (e.g., areas not yet allocated, areas that have been freed, areas past the end of heap blocks, inaccessible areas of the stack)

- Program uses uninitialized values in dangerous ways

- Program leaks memory

- Program frees memory in improper ways (e.g., double frees, mismatched frees)

- Program passes overlapping source and destination memory blocks to memcpy() and related functions

For this simple demo, we are going to create a memory leak, and then watch Valgrind detect it. A memory leak is when a programmer has dynamically allocated some piece of memory, but forgot to return it to the operating system when finished. This memory cannot be used by another program, and thus is "lost".

To create our memory leak, edit the file sorting.c and comment out the following line:

// free(temp_array);

Valgrind requires that the program to be profiled be built using the -g option to ensure that the program includes debugging information linking specific instructions in the compiled program back to the original lines in the source code. Ensure that your Makefile still has the -g flag present, as directed previously.

Recompile your program to include the memory leak:

unix> make

The valgrind program works as follows: valgrind <valgrind options> <program to monitor>. We have a lot of options for Valgrind here:

- --tool=memcheck : Memcheck is the name of the memory usage module in Valgrind. Enable this module.

- --leak-check=yes : Search for memory leaks when the program exits.

- --show-reachable=yes : Display all suspicious blocks of memory.

- --num-callers=20 : The depth of the stack trace to print out. Increase for very complex programs.

- --log-file=memcheck.txt : Save the analysis information to a log file on disk. The program output still goes to the console.

To put this all together, the commands you would use to run Valgrind on your merge sort program is:

unix> valgrind --tool=memcheck --leak-check=yes --show-reachable=yes --num-callers=20 --log-file=memcheck.txt ./sorting_program merge

unix> gedit memcheck.txt &

The output display is described in detail in the program documentation. But briefly, you should (at a minimum) see an entry in the "HEAP SUMMARY" section reading "#### bytes in 1 blocks are definitely lost in loss record 1 of 1", followed by a stack trace highlighting where that memory was originally allocated in your program.

When finished, clean up the memory leak (edit the file sorting.c and uncomment the following line):

free(temp_array);

Re-compile your program (via make), re-run Valgrind and verify that the output file is now clean. You should see a message like this at the bottom of the output log:

HEAP SUMMARY:

in use at exit: 0 bytes in 0 blocks

total heap usage: 1 allocs, 1 frees, ###### bytes allocated

All heap blocks were freed -- no leaks are possible

For counts of detected and suppressed errors, rerun with: -v

ERROR SUMMARY: 0 errors from 0 contexts

Lab Report:

(14) Show the Valgrind output file corresponding to the intentional memory leak. Clearly highlight the line where Valgrind identified where the block was originally allocated.

(15) How many bytes were leaked in the buggy program?

(16) Show the Valgrind output file after you fixed the intentional leak.

(17) Is the Valgrind output report now clean (0 leaks, 0 other errors), or do you have other problems in the code you produced in the pre-lab?

If you are unlucky enough to have other errors, you don't have to *fix* them. But, you do have to look in the Valgrind documentation and *understand/explain* what each error means, and speculate on their cause in your code. (For example, I didn't realize that my original merge sort algorithm was accessing the element *1 position before* the start of the array. It didn't crash, but it was certainly clobbering memory it wasn't supposed to!)

Post-Lab

For this postlab, you are going to exercise the same time / Valgrind (callgrind) skills on a larger non-trivial software program: the GNU wget utility. You need to download the source code for this utility, compile, run, and profile it during normal operation.

Create a new postlab folder for lab04:

unix> cd ~/bitbucket/2012_fall_ecpe170/lab04/

unix> mkdir postlab

unix> cd postlab

Download the current source code. Although we could use Firefox, it's easier to use the wget utility already installed:

(Yes, I know, we're downloading the source code to a program that we already have a binary for. But we need the original source code, and the binary file produced from that source code, in order to profile it).

unix> wget http://ftp.gnu.org/gnu/wget/wget-1.13.tar.gz

Untar / ungzip the archive

unix> tar -xzf wget-1.13.tar.gz

Enter the newly created directory containing the source code.

Get a directory listing. Note that there is no Makefile provided - not to worry! For most large programs, you run a configure script, which produces a Makefile tailored to your specific system and installed tools.

Run the included configure script with the following options:

- --with-ssl=no: Disable SSL support in wget , since we don't have the right libraries pre-installed to compile this part of the larger program successfully.

- CFLAGS="-O2 -Wall -g" : Remember the CFLAGS variable in your Makefile? Here, we're asking the configure script to set the CFLAGS variable in all the Makefiles it creates to this specific value. (All I did was run configure without this option, look at the Makefile produced and see that it set CFLAGS to -O2 -Wall. Then, I added the -g option so that Valgrind (callgrind) can annotate individual lines in the source code.)

- -Wall enables all warnings

- -O2 sets the optimization to level 2

- -g includes Debugging symbols in the compiled executable, making it bigger, but also much easier to understand!

unix> ./configure --with-ssl=no CFLAGS="-O2 -Wall -g"

The output of the configure script is a Makefile (several Makefiles in different directories, actually!)

Compile the program and watch the output fly across the screen:

unix> make

The output of compilation will be an executable file called "wget" that is saved under the "src/" subdirectory.

Warning: Your system already had the wget program installed in the /usr/bin/wget directory. After this make completes, you will have two wget's: One in the system directory, and one here, in your home directory. For this post-lab, you need to be specific about which wget you want to use. If you simply say "wget", the system will default to the one in /usr/bin/wget, which does NOT have the debugging information included or source code available.

Download the file http://www.pacific.edu/Documents/registrar/acrobat/2011-2012catalog.pdf using your new wget program, and verify that it functions as expected.

unix> ./src/wget http://www.pacific.edu/Documents/registrar/acrobat/2011-2012catalog.pdf

Repeat the same command with the profiling tools, and complete the lab report.

Post-Lab Report:

(1) How many seconds of real, user, and system time were required to complete the download? Document the command used to measure this.

(2) Why does real time != (user time + system time)?

(3) How big is the downloaded file on disk in bytes?

(4) What was the maximum heap memory usage used by the wget program at any time? Document the commands used to measure this.

(5) Excluding main() and everything before it, what are the top three functions run by wget when downloading the file when you do count sub-functions in the total? What percent of the time are they running? Document the commands used to measure this and include a screen capture supporting your answer. (Tip: The "Incl" column in kcachegrind should be what you want)

(6) What are the top three functions run by wget when downloading the file when you don't count sub-functions in the total? What percent of the time are they running? (Tip: The "Self" column in kcachegrind should be what you want). Include a screen capture supporting your answer.

(7) Include a screen capture that shows the kcachegrind utility displaying the callgraph for the download, starting at main(), and going down for several levels.

(8) Find fwrite() in the profile. (It should be near the top of the sorted list). It seems to be relatively important, as you might imagine for a program that is writing a file to disk. What function calls fwrite()?

Mercurial Cleanup:

We have not checked wget into version control, and there is really no point in doing so, as it's a standard program, and we haven't modified its source code. Unfortunately, now if you run hg status, you will see a huge list of untracked files. What a mess! Let's tell Mercurial to ignore this directory.

Create a special file called .hgignore in your repository root folder.

unix> cd ~/bitbucket/2012_fall_ecpe170

unix> gedit .hgignore &

Put the following regular expression in the file. It matches starting from the repository root (indicated by ^) anything containing /lab04/postlab/wget-1.13/ Any matching is ignored by Mercurial.

^lab04/postlab/wget-1.13/

Now, add this file to Mercurial, and run hg status. It should be much cleaner now.

unix> hg add .hgignore

unix> hg status

Post-Lab Report - Wrapup:

(1) What was the best aspect of this lab?

(2) What was the worst aspect of this lab?

(3) How would you suggest improving this lab in future semesters?