CommonCrawl Tutorial

See also: New tutorial (updated for Spring 2016)

This tutorial is based on Steve Salevan's blog post MapReduce for the Masses: Zero to Hadoop in Five Minutes with Common Crawl. That original tutorial used the web interface for Amazon Elastic MapReduce, which is not accessible to the sub-accounts created for you via Amazon's Identity and Access Management (IAM) feature. Since I'm not giving you my own Amazon password (sorry...), this tutorial uses a command-line interface to Elastic MapReduce (link 1, link 2) that is compatible with IAM accounts. While it's a little more work to set up, it's a better method for dealing with larger, more complicated projects.

Install Development Tools

Install Eclipse (for writing Hadoop code)

- Windows and OS X: Download the “Eclipse IDE for Java developers” installer package located at: http://www.eclipse.org/downloads/

- Linux: Run the following command in a terminal

- RHEL/Fedora

sudo yum install eclipse

- Ubuntu/Debian

sudo apt-get install eclipse

Install Java 6 SDK - Java 7 is not compatible with Amazon's systems yet!

Install Git (for retrieving the tutorial application)

- Windows: Install the latest .exe from: http://code.google.com/p/msysgit/downloads/list

- To use Git later, go to the Start Menu, find Git, and then choose "Git Bash"

- OS X: Install the appropriate .dmg from: http://code.google.com/p/git-osx-installer/downloads/list

- Linux: Run the following command in a terminal

- RHEL/Fedora

sudo yum install git

- Ubuntu/Debian

sudo apt-get install git

Install Ruby 1.8

- Windows: Install the latest 1.8.x .exe from http://rubyforge.org/frs/?group_id=167&release_id=28426

- Select the option to add Ruby to your path.

- To use Ruby later (for the Amazon command-line interface), go to the Start Menu, find Ruby, and then choose "Start Command Prompt with Ruby". Then, all of your commands will be of the format "ruby command", e.g. "ruby elastic-mapreduce --help"

- Linux: Run the following command in a terminal

- RHEL/Fedora

sudo yum install ruby

- Ubuntu/Debian

sudo apt-get install ruby-full

Install Amazon Elastic MapReduce Ruby Client from http://aws.amazon.com/developertools/2264

- Follow the link and download the .zip file

Create a new directory to hold the tool:

mkdir elastic-mapreduce-cli

- Uncompress the .zip file into the folder you just created

Get your Amazon Web Services Credentials

Log in to Sakai and check the course Dropbox to find a text file containing your super-secret login information. These accounts are linked to the master (instructor) account, which centralizes billing for the course. This uses Amazon's Identity and Access Management (IAM) feature, which was designed to let multiple employees of a company work together on Amazon's cloud services without sharing a single username and password.

Following the super-secret login link contained in the text file, log in to the Amazon Web Services console (via your web browser).

Configure a keypair for Amazon's Elastic Compute Cloud (EC2) service:

- Select the EC2 tab in the web control panel.

- Create a keypair for your account. Remember the name of this keypair - you'll need it next! Save the precious .pem download file to your home directory or some place where you will remember its location later.

Although we won't use it directly, this keypair would allow you to SSH directly into the EC2 Linux compute nodes spawned as part of this tutorial.

Create a bucket (aka "folder") in Amazon's Simple Storage Service (S3)

- Select the S3 tab in the web control panel.

- Click "Create Bucket", give your bucket a name, and click the "Create" button. Bucket names must be unique across both the whole class account and the entire world! So, something like "yourusername-commoncrawl-tutorial" is a good choice. Remember this bucket name!

Configure Amazon Elastic MapReduce Ruby Client

The client needs access to your Amazon credentials in order to work. Create a text file called credentials.json in the same directory as the ruby client, i.e. elastic-mapreduce-cli/. Into this file, place the following information, customized for your specific account and directory setup. Note that this example is pre-set to the us-east-1 region, since that is where the CommonCrawl data is based.

{
"access_id": "[YOUR AWS access key ID]",
"private_key": "[YOUR AWS secret key]",
"keypair": "[YOUR key pair name]",
"key-pair-file": "[The path and name of YOUR PEM file]",
"log_uri": "s3n://[YOUR bucket name in S3]/mylog-uri/",
"region": "us-east-1"
}
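A missing or misspelled key in credentials.json is an easy mistake to make. The following is a minimal sketch of a sanity check, assuming only the key names shown above; the class CredentialsCheck and its methods are hypothetical and are not part of the Amazon Ruby client. It does a plain text search for each quoted key name, so it will not catch malformed JSON.

```java
import java.io.BufferedReader;
import java.io.File;
import java.io.FileReader;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper (not part of the Ruby client): lists which of the
// expected keys do not appear in the text of credentials.json.
class CredentialsCheck {
    // Key names copied from the example file in this tutorial.
    static final String[] REQUIRED_KEYS = {
        "access_id", "private_key", "keypair", "key-pair-file", "log_uri", "region"
    };

    // Plain substring search for each quoted key name; this is not a JSON parser.
    static List<String> missingKeys(String json) {
        List<String> missing = new ArrayList<String>();
        for (String key : REQUIRED_KEYS) {
            if (!json.contains("\"" + key + "\"")) {
                missing.add(key);
            }
        }
        return missing;
    }

    public static void main(String[] args) throws IOException {
        String path = args.length > 0 ? args[0] : "credentials.json";
        if (!new File(path).exists()) {
            System.out.println("No file found at " + path);
            return;
        }
        // Read the whole file into one string.
        StringBuilder text = new StringBuilder();
        BufferedReader in = new BufferedReader(new FileReader(path));
        try {
            String line;
            while ((line = in.readLine()) != null) {
                text.append(line).append('\n');
            }
        } finally {
            in.close();
        }
        List<String> missing = missingKeys(text.toString());
        System.out.println(missing.isEmpty()
                ? "All expected keys are present"
                : "Missing keys: " + missing);
    }
}
```

Run it from the elastic-mapreduce-cli/ directory, or pass the path to the file as the first argument.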

Compile and Build HelloWorld JAR

Now that you’ve installed the packages you need to play with the example code, run the following command from a terminal/command prompt to pull down the code (Windows users - run this in your Git Bash shell):

git clone git://github.com/ssalevan/cc-helloworld.git

Next, start Eclipse. Open the File menu, then select “Project” from the “New” menu. Open the “Java” folder and select “Java Project from Existing Ant Buildfile”. Click Browse, then locate the folder containing the code you just checked out (if you didn’t change the directory when you opened the terminal, it should be in your home directory) and select the “build.xml” file. Eclipse will find the right targets. Tick the “Link to the buildfile in the file system” box, as this will enable you to share the edits you make to it in Eclipse with git.

We now need to tell Eclipse how to build our JAR, so right click on the base project folder (by default it’s named “Hello World”) and select “Properties” from the menu that appears. Navigate to the Builders tab in the left hand panel of the Properties window, then click “New”. Select “Ant Builder” from the dialog which appears, then click OK.

To configure our new Ant builder, we need to specify three pieces of information: where the buildfile is located, where the root directory of the project is, and which Ant build target we wish to execute. To set the buildfile, click the “Browse File System” button under the “Buildfile:” field, and select the build.xml file you located earlier. To set the root directory, click the “Browse File System” button under the “Base Directory:” field, and select the folder into which you checked out the code. To specify the target, enter “dist” without the quotes into the “Arguments” field. Click OK and close the Properties window.

Code change as of 3/11/12: CommonCrawl moved on Amazon's S3 service. Verify that your project has the current bucket name. Locate the HelloWorld.java file (inside src/). There should be a line near the top setting the CC_BUCKET variable. Ensure that the line is as follows: private static final String CC_BUCKET = "aws-publicdatasets";

Finally, right click on the base project folder and select “Build Project”, and Ant will assemble a JAR, ready for use in Elastic MapReduce.

Upload the HelloWorld JAR to Amazon S3

Go back to the web console interface to S3. Select the new bucket you created previously. Click the “Upload” button, and select the JAR you built in Eclipse. It should be located here:

<your checkout dir>/dist/lib/HelloWorld.jar

Tip: A stand-alone S3 file browser on your computer might make this easier in the future. Further, it would allow you to explore outside of your own buckets. For example, you could explore the CommonCrawl datasets at s3://aws-publicdatasets/common-crawl/crawl-002/

- Mac: CyberDuck: http://cyberduck.ch/

- Windows: S3Browser: http://s3browser.com/ or Firefox extension S3Fox: http://www.s3fox.net/

- Linux: There must be something...

Create an Elastic MapReduce job based on your new JAR

Now that the JAR is uploaded into S3, all we need to do is point Elastic MapReduce to it. The original tutorial uses the web interface to accomplish this, but as serious programmers we will do it via the command-line interface. (Plus, you have no choice, since Amazon doesn't yet support the web interface for your account type.)

From inside the Ruby client directory you created previously, use the following commands:

Get a list of all commands - Helpful for future reference and debugging! (Windows users, run this in your Ruby shell)

./elastic-mapreduce --help

Create a job flow - A job can contain many different MapReduce programs to execute in sequence. We only need one today.

./elastic-mapreduce \
--create \
--alive \
--name "[Human-friendly name for this entire job]" \
--num-instances 3 \
--master-instance-type m1.small \
--slave-instance-type m1.small \
--bootstrap-action s3://elasticmapreduce/bootstrap-actions/install-ganglia

Important note: The --alive argument tells Amazon that you want to keep your EC2 servers running even when the specific tasks finish! While this saves a lot of time in starting up servers while doing development/testing, it will cost you $$ if you forget to turn them off when you truly are finished!

Add a step to the job flow - This will add your specific MapReduce program to the job flow.

./elastic-mapreduce --jobflow [job flow ID produced from --create command previously] \
--jar s3n://[S3 bucket with program]/HelloWorld.jar \
--arg org.commoncrawl.tutorial.HelloWorld \
--arg [Your AWS access key ID] \
--arg [Your AWS secret key] \
--arg common-crawl/crawl-002/2010/01/07/18/1262876244253_18.arc.gz \
--arg s3n://[S3 bucket with program]/helloworld-out

What are we doing with this step? We're telling Amazon that we want to run a .jar file for our MapReduce program, and we're providing command-line arguments (--arg) to that program as initial values. The arguments include:

- Run the main() method in our HelloWorld class (located at org.commoncrawl.tutorial.HelloWorld)

- Log into Amazon S3 with your AWS access codes. The CommonCrawl data is pay per access - Amazon needs to know who to bill! (Note: It's free if you access it from inside Amazon, but a login is still required)

- Count all the words taken from a chunk of what the web crawler downloaded at 6:00PM on January 7th, 2010. Note that CommonCrawl stores its crawl information as GZipped ARC-formatted files, and each one is indexed using the following directory scheme: /YYYY/MM/DD/HH/*.arc.gz, where HH is the hour that the crawler ran in 24-hour format

- Output the results as a series of CSV files into your Amazon S3 bucket (in a directory called helloworld-out)
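To make the mapping from --arg values to the program concrete, here is a minimal sketch of how a driver's main() might receive them. It assumes that Hadoop consumes the first --arg (the class name) when it launches the JAR, so the remaining four values arrive as args[0] through args[3]. The class StepArguments, the inputUri helper, and the s3n:// URI layout are illustrative assumptions, not a copy of HelloWorld.java.

```java
// Illustrative sketch only: the class name, helper name, and URI layout are
// assumptions about how a driver such as HelloWorld could use its arguments.
class StepArguments {
    // Bucket name taken from the CC_BUCKET line described earlier in this tutorial.
    static final String CC_BUCKET = "aws-publicdatasets";

    // Combines the bucket name and the path argument into an s3n:// input URI.
    static String inputUri(String bucket, String pathArg) {
        return "s3n://" + bucket + "/" + pathArg;
    }

    public static void main(String[] args) {
        if (args.length != 4) {
            System.err.println(
                "Usage: StepArguments <access key ID> <secret key> <input path> <output URI>");
            return;
        }
        String accessKeyId = args[0];  // 2nd --arg in the step
        String secretKey = args[1];    // 3rd --arg in the step (never print this one)
        String inputPath = args[2];    // 4th --arg: path inside the CommonCrawl bucket
        String outputUri = args[3];    // 5th --arg: where the results are written

        System.out.println("Access key ID length: " + accessKeyId.length());
        System.out.println("Secret key supplied: " + (secretKey.length() > 0));
        System.out.println("Input:  " + inputUri(CC_BUCKET, inputPath));
        System.out.println("Output: " + outputUri);
    }
}
```

Comparing this against the argument handling near the top of main() in HelloWorld.java is a quick way to confirm the order the real program expects.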

If you prefer to run against a larger subset of the crawl, you can use directory prefix notation to specify a more inclusive set of data. I wouldn't recommend it for the tutorial! But for later reference (prefixes are shown relative to the common-crawl/crawl-002/ directory used in the step above):

- 2010/01/07/18/ - All files from this particular crawler run (2010, January 7th, 6PM). Warning: The trailing slash is crucial! Otherwise, the last part (e.g. hour "18" in the example) will be ignored.

- 2010/ - All crawl files from 2010

Now, your Hadoop job will be sent to the Amazon cloud.

Tip: Want to specify non-contiguous subsets of the crawl? Use a comma to separate different directory prefixes. For example, the following prefix argument, when used to add a step to your jobflow, will process two separate ARC files that happen to have one other ARC file between them (which is not processed). Warning: Don't put any spaces around the comma!

--arg 2010/01/07/18/1262876244253_18.arc.gz,2010/01/07/18/1262876256800_18.arc.gz
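Two details above are easy to get wrong by hand: the trailing slash on a directory prefix and stray spaces around the comma. The following minimal sketch builds prefix arguments in the same form as the examples above. The class CrawlPrefixes and its methods are hypothetical helpers, not part of the tutorial code or the Amazon client.

```java
import java.util.Locale;
import java.util.regex.Pattern;

// Hypothetical helper for building the input-path value passed with --arg.
class CrawlPrefixes {
    private static final Pattern SPACE_OR_COMMA = Pattern.compile("[\\s,]");

    // Builds "YYYY/MM/DD/HH/" with zero padding and the trailing slash included.
    static String hourPrefix(int year, int month, int day, int hour) {
        return String.format(Locale.ROOT, "%04d/%02d/%02d/%02d/", year, month, day, hour);
    }

    // Builds "YYYY/" to select every crawl file from one year.
    static String yearPrefix(int year) {
        return String.format(Locale.ROOT, "%04d/", year);
    }

    // Joins prefixes with a bare comma. An empty entry, or one containing
    // whitespace or a comma, is rejected instead of being passed along silently.
    static String join(String... prefixes) {
        StringBuilder joined = new StringBuilder();
        for (String prefix : prefixes) {
            if (prefix.length() == 0 || SPACE_OR_COMMA.matcher(prefix).find()) {
                throw new IllegalArgumentException("Bad prefix: '" + prefix + "'");
            }
            if (joined.length() > 0) {
                joined.append(',');
            }
            joined.append(prefix);
        }
        return joined.toString();
    }

    public static void main(String[] args) {
        String hour = hourPrefix(2010, 1, 7, 18);
        // Prints the directory prefix, then the two-file argument from the tip above.
        System.out.println(hour);
        System.out.println(join(hour + "1262876244253_18.arc.gz",
                                hour + "1262876256800_18.arc.gz"));
    }
}
```

The printed value can be pasted after --arg when adding a step to the job flow.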

Watch the show

It will take Amazon some time (5+ minutes?) to spin up your requested instances and begin processing. If you had direct web access to the Elastic MapReduce console, you could watch the status there. Instead, you can learn much the same thing via the following tools:

- Command-line, i.e. ./elastic-mapreduce --list

- Web interface - Select the EC2 tab. Have your servers been started yet?

- Web interface - Select the S3 tab.

- Are there log files (standard output, standard error) in the mylog-uri folder?

- Has your output directory been created yet? (That's a very good way to know it finished!). You can download the output files via the S3 web interface if you want to inspect them.

- Hadoop web console - NOT the Amazon console, but a Hadoop-specific console - See below

- Ganglia cluster performance monitor - See below

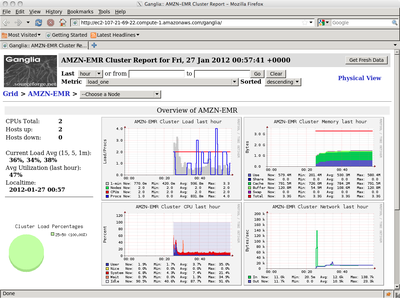

Advanced Topics - Watch the show with Ganglia

Ganglia is a monitoring tool that allows you to see the memory usage, CPU utilization, and network traffic of the cluster. You already enabled Ganglia when you created the job flow that launched the EC2 virtual machines. Although this tool is web accessible, it is located behind Amazon's firewall, so you can't access it directly.

Obtain the public DNS address of the Hadoop master node. It should be something like ec2-######.amazonaws.com:

./elastic-mapreduce --list

Using SSH, listen locally on port 8157 and create an encrypted tunnel that connects between your computer and the Hadoop master node. We'll use this tunnel to transfer web traffic to/from the cluster. Rather than using a username/password, this command uses the public/private key method to authenticate. (Windows users: You'll have to use the PuTTY client and check the right checkboxes to accomplish this same task)

ssh -i [path to .pem key file] -ND 8157 hadoop@ec2-#######.compute-1.amazonaws.com

You won't see any messages on the screen if this works. Leave SSH running for as long as you want the tunnel to exist. Note that, if you left off the "-ND 8157" part of the command, this would give you SSH command-line access to the master server to do whatever you want! Remember, Elastic MapReduce isn't magic; it's just doing the work of configuring a virtual machine and installing software automatically in it.

You need to configure your web browser to access URLs of the format *ec2*.amazonaws.com* --or-- *ec2.internal* by using the SSH tunnel (port 8157) you just created as a SOCKSv5 proxy instead of accessing those URLs directly. Setup details differ by browser. One good option for Firefox is using the FoxyProxy plugin, since you can configure it to only proxy those specific URLs, not any website you access. Read installation instructions here (scroll to the bottom).

Once your proxy is configured, access this URL directly from your web browser: http://ec2-########.amazonaws.com/ganglia and browse the statistics!

When finished, terminate the SSH connection (CTRL-C).
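The proxy rule described above (send only the cluster's hosts through the tunnel, everything else directly) can also be expressed in code, which may help if you want to fetch Ganglia or Hadoop pages from a program instead of a browser. This is a minimal sketch, assuming the tunnel is listening on localhost port 8157 as set up above. The class TunnelProxy and the sample host names in main() are made up for illustration.

```java
import java.net.InetSocketAddress;
import java.net.Proxy;

// Hypothetical helper mirroring the browser proxy rule from this section.
class TunnelProxy {
    // Local port opened by the "ssh -ND 8157" command above.
    static final int TUNNEL_PORT = 8157;

    // True for hosts matching *ec2*.amazonaws.com* or *ec2.internal*.
    static boolean usesTunnel(String host) {
        return host.matches(".*ec2.*\\.amazonaws\\.com.*") || host.contains("ec2.internal");
    }

    // Returns a SOCKS proxy pointing at the tunnel for cluster hosts,
    // and a direct (no proxy) setting for every other host.
    static Proxy proxyFor(String host) {
        if (usesTunnel(host)) {
            return new Proxy(Proxy.Type.SOCKS, new InetSocketAddress("localhost", TUNNEL_PORT));
        }
        return Proxy.NO_PROXY;
    }

    public static void main(String[] args) {
        // Sample host names are placeholders, not real cluster addresses.
        String[] hosts = {
            "ec2-0-0-0-0.compute-1.amazonaws.com",
            "ip-10-0-0-1.ec2.internal",
            "www.eclipse.org"
        };
        for (String host : hosts) {
            System.out.println(host + " -> " + proxyFor(host).type());
        }
    }
}
```

A Proxy built this way can be passed to java.net.URL's openConnection(Proxy) method while the SSH tunnel is running.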

Advanced Topics - Watch the show with Hadoop web interface

Hadoop provides a web interface that is separate from the general Amazon Web Services interface (i.e. it works even if you run Hadoop on your own personal cluster instead of Amazon's). To access it, complete the same SSH tunnel and proxy setup as needed for Ganglia above. Then, access the following URLs for different Hadoop services:

- Hadoop Map/Reduce Administration (aka the "JobTracker"): http://ec2-########.amazonaws.com:9100

- Here you can see all submitted (current and past) MapReduce jobs, watch their execution progress, and access log files to view status and error messages.

- Hadoop NameNode: http://ec2-########.amazonaws.com:9101 (this storage mechanism is not used for your projects, so it's supposed to be empty)

Advanced Topics - FAQs from Prior Projects

Q: Can I get a single output file?

A: Yes! To get a single output file at the end of processing, you need to run only *1* reducer across the entire cluster. This is a bottleneck for large datasets, but it might be worth it compared to the alternative (writing a simple program that merges your N sorted output files into 1 sorted output file).

Try inserting the following two lines of code immediately before the line "JobClient.runJob(conf)". If they are placed any earlier, the setting appears to be overwritten by Hadoop logic that tries to auto-size your job for performance. The two lines are probably redundant, since they should set the same internal variable.

conf.setNumReduceTasks(1);

conf.set("mapred.reduce.tasks", "1");

JobClient.runJob(conf);
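For reference, the alternative mentioned above (merging N sorted output files into one) is also short. The following is a minimal sketch of a k-way merge using a priority queue. It assumes each part file is already sorted line by line in Java String order, which matches plain ASCII keys. The class MergeSortedParts and the sample lines are made up for illustration.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;
import java.io.StringWriter;
import java.io.Writer;
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

// Hypothetical helper: merges several individually sorted inputs into one sorted output.
class MergeSortedParts {
    // One pending line plus the reader it came from.
    static final class Entry implements Comparable<Entry> {
        final String line;
        final BufferedReader source;

        Entry(String line, BufferedReader source) {
            this.line = line;
            this.source = source;
        }

        public int compareTo(Entry other) {
            return line.compareTo(other.line);
        }
    }

    // Repeatedly writes the smallest pending line, then refills from the same source.
    static void merge(List<BufferedReader> parts, Writer out) throws IOException {
        PriorityQueue<Entry> heap = new PriorityQueue<Entry>();
        for (BufferedReader part : parts) {
            String first = part.readLine();
            if (first != null) {
                heap.add(new Entry(first, part));
            }
        }
        while (!heap.isEmpty()) {
            Entry smallest = heap.poll();
            out.write(smallest.line);
            out.write('\n');
            String next = smallest.source.readLine();
            if (next != null) {
                heap.add(new Entry(next, smallest.source));
            }
        }
    }

    public static void main(String[] args) throws IOException {
        // In-memory stand-ins for two sorted part files (sample data only).
        List<BufferedReader> parts = new ArrayList<BufferedReader>();
        parts.add(new BufferedReader(new StringReader("apple,3\ncherry,1\n")));
        parts.add(new BufferedReader(new StringReader("banana,2\ndate,5\n")));
        StringWriter merged = new StringWriter();
        merge(parts, merged);
        System.out.print(merged);
    }
}
```

To merge real downloaded output files, wrap each one in a BufferedReader over a FileReader and pass a FileWriter as the output. Only one line per input is held in memory at a time, so this works for files much larger than RAM.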

Q: Can I change the output data type of a map task, i.e. changing a key/value pair such that the "value" is a string, not an int?

A: Yes! A discussion at StackOverflow notes that you need to change the type in three different places:

- the map task

- the reduce task

- and the output value (or key) class in main().

For this example, the original poster wanted to change from type Int (IntWritable) to Double (DoubleWritable). You should be able to follow the same method to change from Long (LongWritable) to a string (Text).

Q: Can I run an external program from inside Java?

A: Yes! There are a few examples online that cover invoking an external binary, sending it data (via stdin), and capturing its output (via stdout) from Java.

http://www.linglom.com/2007/06/06/how-to-run-command-line-or-execute-external-application-from-java/

http://www.rgagnon.com/javadetails/java-0014.html

In fact, Hadoop even has a "Shell" class for this purpose: http://stackoverflow.com/questions/7291232/running-hadoop-mapreduce-is-it-possible-to-call-external-executables-outside-of
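The pattern those examples describe (start a program, write to its stdin, read its stdout) looks roughly like the following with the standard ProcessBuilder class. This is a minimal sketch, assuming a Unix-style sort command is available on the PATH. The class RunExternal is made up for illustration and does not use Hadoop's Shell class.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: runs an external command, feeds it input, returns its output.
class RunExternal {
    static String run(List<String> command, String input)
            throws IOException, InterruptedException {
        ProcessBuilder builder = new ProcessBuilder(command);
        builder.redirectErrorStream(true); // fold stderr into stdout so one reader sees both
        Process process = builder.start();

        // Send the input, then close stdin so the program sees end-of-file.
        // Note: for large inputs and outputs, write and read on separate threads
        // to avoid blocking when a pipe buffer fills up.
        OutputStream stdin = process.getOutputStream();
        stdin.write(input.getBytes("UTF-8"));
        stdin.close();

        // Collect everything the program prints.
        StringBuilder output = new StringBuilder();
        BufferedReader stdout = new BufferedReader(
                new InputStreamReader(process.getInputStream(), "UTF-8"));
        try {
            String line;
            while ((line = stdout.readLine()) != null) {
                output.append(line).append('\n');
            }
        } finally {
            stdout.close();
        }

        int exitCode = process.waitFor();
        if (exitCode != 0) {
            throw new IOException("Command exited with status " + exitCode);
        }
        return output.toString();
    }

    public static void main(String[] args) throws Exception {
        // Assumes a "sort" executable is available on the PATH.
        System.out.print(run(Arrays.asList("sort"), "banana\napple\n"));
    }
}
```

Inside a map or reduce task, the binary must also exist on every EC2 node, which is where a bootstrap action can help.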

Q: Can I use a Bootstrapping action (when booting the EC2 node via Elastic MapReduce) to access files in S3?

A: Yes!

Amazon provides an example bootstrap script at http://aws.amazon.com/articles/3938 (about halfway down the page). In the example, they use the 'wget' utility to download an example .tar.gz archive and uncompress it. Note, however, that while the download file is in S3, it is a PUBLIC file, allowing wget to work in HTTP mode. (You can mark files as public via the S3 web interface).

A second example, posted at http://aws.typepad.com/aws/2010/04/new-elastic-mapreduce-feature-bootstrap-actions.html, uses the command hadoop fs -copyToLocal to copy from S3 to the local file system. This *should* work even if your S3 files aren't marked as public. (After all, if Hadoop hasn't already been configured by Amazon with your correct key, there's no way for it to load the .jar file with your MapReduce program to execute.)

Cleaning Up

Don't forget to shut down the EC2 servers you (implicitly) started when you created your job flow!

./elastic-mapreduce --list

./elastic-mapreduce --terminate [job flow ID]

S3 charges you per gigabyte of storage used per month. Be tidy with your output files, and delete them when your projects are finished!

References

- Original tutorial

- .zip snapshot of tutorial code on 3/11/2012. Does not include the bugfix to the bucket name as described above. When possible, pull directly from GitHub!

- Amazon Elastic MapReduce Ruby Client - with examples!

- Amazon Elastic MapReduce guide - with step-by-step examples!

- Amazon Ganglia guide

- Amazon Hadoop Web Interface guide